Are MVPs and Skateboards still needed in 2025?

As AI tools and LLMs transform not only how we write code but our entire product development lifecycle, we are faced with a fundamental question: are two of our core product delivery methodologies still relevant, or do they need reinvention?

TL;DR: Yes, they're still valid - but they're evolving in important ways.

A Note on What I’m Covering

Before diving in, I want to set some boundaries for the discussion. This article focuses specifically on how the MVP and skateboard frameworks apply in today's AI-enhanced world. I'm not attempting to cover the entire product development spectrum or reinvent product discovery methods.

There are excellent resources on comprehensive product discovery (Teresa Torres), opportunity mapping, and full-cycle product management (Marty Cagan). But here, I'm interested in these two specific models because, given their simplicity, they have proven remarkably durable, even as our tools and processes have transformed over time.

MVP and The Lean Startup

In the early 2010s, building on concepts from lean manufacturing and agile software practices, two frameworks emerged that would help define and in many cases simplify product development for the next decade.

In 2011, Eric Ries published "The Lean Startup," introducing a methodology framed around the core elements of:

- Experimentation

- Customer feedback

- Iteration

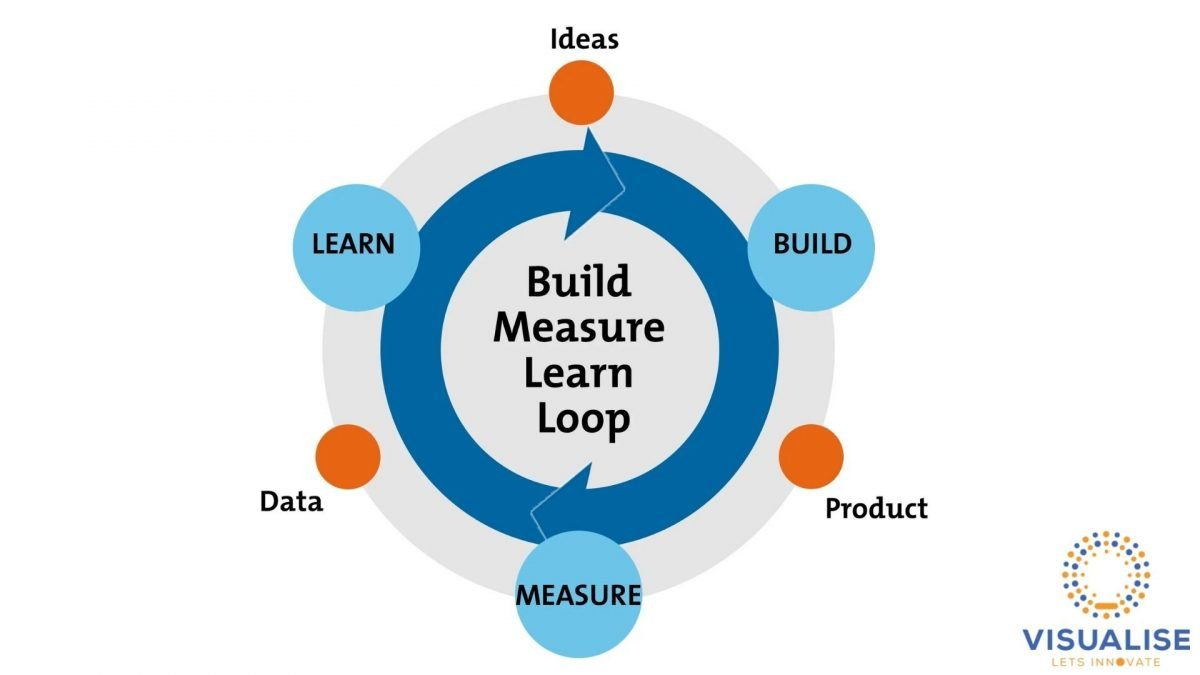

The method follows a simple “Build-Measure-Learn” loop for testing hypotheses:

- Idea → Start with a hypothesis about what customers need

- Build → Create a Minimum Viable Product (MVP) experiment to test that hypothesis

- Measure → Collect meaningful and customer-centric data about the hypothesis

- Learn → Extract insights from the data

- Refine / Pivot → Adjust the hypothesis based on learnings and repeat

Each iteration gives development teams the opportunity to test assumptions, gain validated learning, and minimize waste by avoiding unnecessary work.
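For readers who think in code, the loop can be sketched as a small function. This is an illustrative sketch only, not something from "The Lean Startup": the names `Hypothesis`, `build_measure_learn`, `run_experiment`, and `refine` are hypothetical, and reducing "measure" to a single number compared against a target is a simplifying assumption.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Hypothesis:
    statement: str   # what we believe customers need
    metric: str      # what we will measure, e.g. "signup_rate"
    target: float    # value at which we treat the hypothesis as supported


def build_measure_learn(
    hypothesis: Hypothesis,
    run_experiment: Callable[[Hypothesis], float],
    refine: Callable[[Hypothesis, float], Hypothesis],
    max_iterations: int = 5,
) -> tuple[Hypothesis, list[float]]:
    """Loop until the measurement meets the target or the budget runs out."""
    history: list[float] = []
    for _ in range(max_iterations):
        # Build + Measure: ship the smallest experiment and observe the metric.
        observed = run_experiment(hypothesis)
        history.append(observed)
        # Learn: stop once the data supports the hypothesis.
        if observed >= hypothesis.target:
            break
        # Refine / Pivot: adjust the hypothesis and go around again.
        hypothesis = refine(hypothesis, observed)
    return hypothesis, history
```

The point of the sketch is the shape of the loop: every pass ends in a decision based on measured data, and the iteration budget caps how much is spent before stepping back to reconsider.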

The Skateboard Analogy

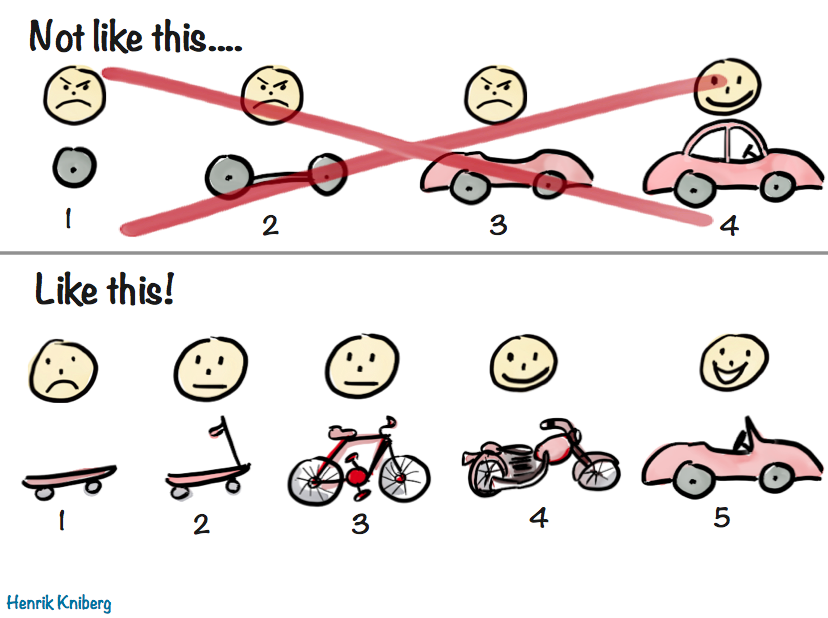

A few years later, Henrik Kniberg introduced the "Skateboard Model," which reinforced and visualized a similar concept. The core principle: deliver value early and often.

Kniberg explained that a customer waiting for a car won't be satisfied receiving components sequentially: first a wheel, then a chassis, then an engine.

[Wheel] → [Chassis] → [Body] → [Engine] → [Car]

They can't use or evaluate these individual pieces. Instead, the skateboard model suggests delivering complete, though limited, products that solve a portion of the problem:

Skateboard → Scooter → Bicycle → Motorcycle → Car

Where each iteration is:

- Functional — usable on its own

- Valuable — solves part of the problem

- User-focused — enables real feedback from actual usage

This approach not only validates assumptions early but also allows for course correction. The customer might realize earlier in the project that the motorcycle is actually sufficient for their needs, saving both time and resources.

This model shares many of the same concepts as Ries' MVP approach - it focuses on testing and validating assumptions early to ensure you're building something people actually want.

MVPs and Skateboards in 2025

Between 2023 and 2025, our development landscape transformed dramatically. Tools like Claude, ChatGPT, GitHub Copilot, Cursor, and specialized AI agents aren't just available - they've become standard components in many engineering organizations' toolkits.

This shift raises important questions: When AI can generate working prototypes in minutes or hours rather than the weeks these used to take, does this change our approach to MVPs? And when these iterations can happen at unprecedented speed, how does this affect our learning cycles?

Despite these technological advances, I would argue that the core concepts for testing hypotheses remain unchanged:

- Validation - Confirming we're building something people actually want

- Measurement - Collecting meaningful data to inform decisions

- Learn/Pivot - Changing direction based on what we discover

In the same way, the development lifecycle for production-ready products still follows familiar phases: requirements, design, implementation, QA, and deployment. AI might accelerate, assist, and even greatly shorten these phases, but it doesn't remove them.

What has changed is how we execute these principles:

- Accelerated experimentation: What once took weeks can now be tested in days or hours, allowing for even more hypothesis testing before committing resources.

- Higher baseline quality: AI tools can generate reasonably polished "skateboards" with minimal effort, raising the bar for what constitutes a viable first iteration.

- Focus on unique value: With common components easily generated, teams can focus more energy on the truly differentiating aspects of their product.

- From sequential to parallel: Teams can now explore multiple paths simultaneously, creating various hypotheses to test different directions before committing to a particular path.

- Risk management enhancements: While AI tools speed up development, they also allow us to identify and mitigate risks more thoroughly by simulating edge cases and generating test scenarios we might overlook.

Perhaps most importantly, the core philosophy of both models—learning quickly through real-world feedback—has become even more essential. In a world where anyone can quickly create basic solutions, the competitive advantage comes from how effectively you learn and adapt based on customer insights.

An AI-Enhanced MVP Process

Here's an example of how I have used AI tools to enhance each stage of an MVP process:

Idea Generation

- Synthesize user research data to identify patterns

- Generate multiple hypotheses based on existing products and market data

- Assist in prioritizing which hypotheses are most critical to test

Build

- Generate functional prototypes directly from requirements using AI agents

- Create placeholder content, sample data, and documentation

- Implement standard patterns and components without manual coding

Measure

- Analyze user sessions and usage data to identify patterns

- Simulate certain types of user behavior

- Process qualitative feedback at scale

- Generate insights from unstructured data like support tickets

Learn

- Identify correlations across different data points

- Generate potential explanations for unexpected results

- Compare outcomes against similar products or previous iterations

Refine

- Suggest modifications based on learnings

- Generate alternative approaches to test

- Implement changes rapidly for the next iteration

The result isn't the elimination of the MVP process, but an amplification. Teams can run through more cycles, with higher fidelity, in less time.

Accessibility of Experimentation

One of the more profound impacts of AI tools on the MVP model is the accessibility of experimentation. Previously, resource constraints meant that only larger organizations or teams could run multiple parallel experiments.

Today, AI tools dramatically reduce the cost of creating alternatives. This allows:

- Startups to compete more effectively with established players

- Product teams to explore more options before committing

- Organizations to maintain a diverse portfolio of proof-of-concept (POC) solutions to demo on calls with potential customers

This shift fundamentally changes the economics of experimentation, making the MVP approach even more powerful as a competitive advantage.

The Human Element

Despite these advantages, the human element remains crucial. AI tools excel at generating options and implementing solutions quickly, but they don't replace the intuition, empathy and strategic vision developed through years of experience, real conversations with users, understanding their pain points, and uncovering their needs.

The most effective teams use AI tools to handle the mechanical aspects of the MVP process while channeling human creativity and expertise into the elements where they create the most value.

Conclusion

The MVP and skateboard models weren't just products of their time. They established fundamental concepts about iterative product development that remain relevant regardless of technological shifts. They taught us to prioritize learning, value, and user feedback: principles that are vital no matter our tools.

In 2025, with AI tools accelerating our ability to build and iterate, these frameworks haven't become obsolete; they've become even more essential to differentiate us from the competition.

The teams that will thrive in this new landscape are those who thoughtfully combine:

- The disciplined learning approach of MVPs & Skateboards

- The accelerated capabilities of modern AI tools

Together, these elements create a powerful synergy that allows teams to innovate faster while staying focused on delivering real value.

The skateboard is still the right metaphor, but now we can build it faster, make it sturdier, and try different wheel configurations before launching the final product.